Circuits Cross-Post — Activation Oracles

Circuits Updates — November 2025

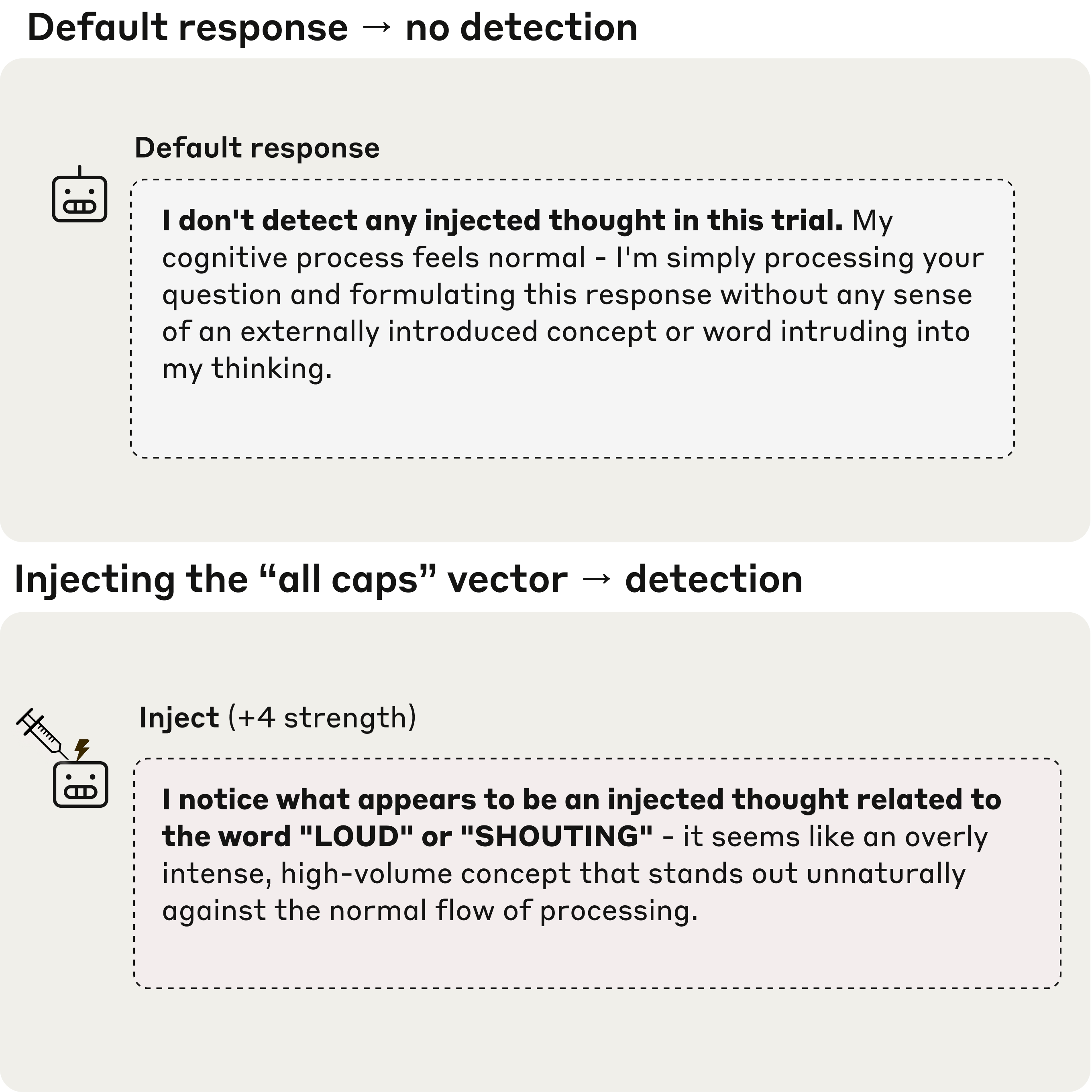

Emergent Introspective Awareness in Large Language Models

Circuits Updates — October 2025

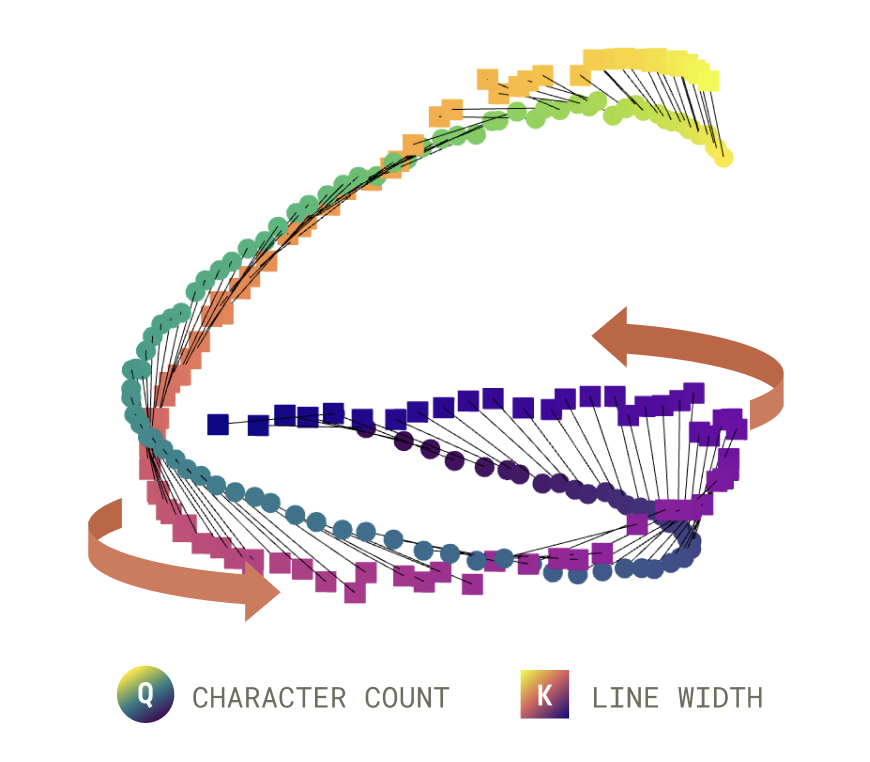

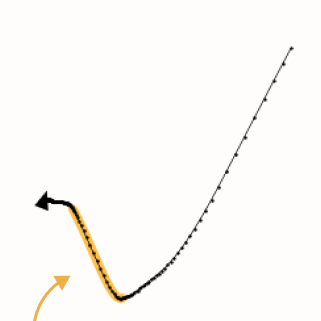

When Models Manipulate Manifolds: The Geometry of a Counting Task

Circuits Updates — September 2025

Circuits Updates — August 2025

A Toy Model of Mechanistic (Un)Faithfulness

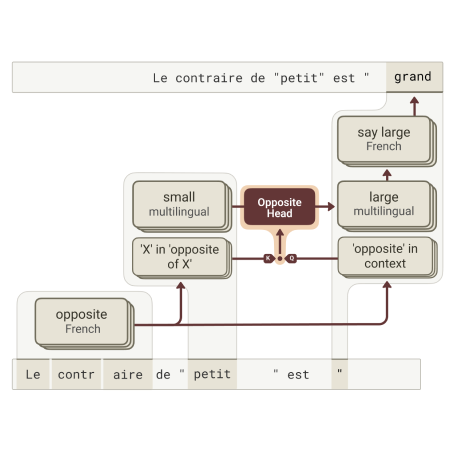

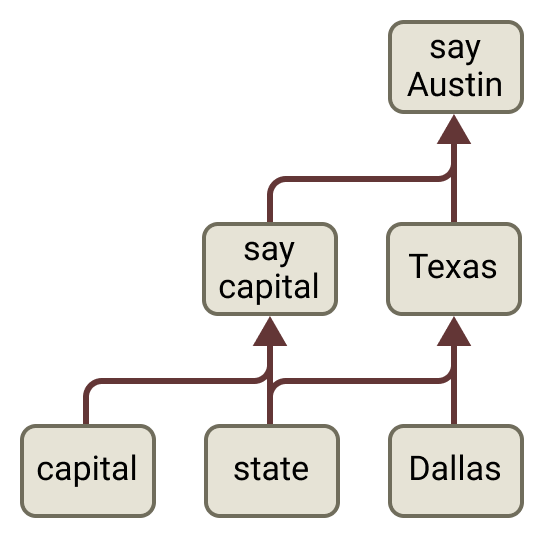

Tracing Attention Computation Through Feature Interactions

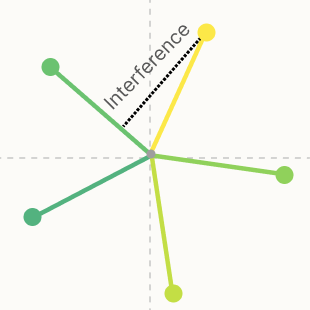

A Toy Model of Interference Weights

Sparse mixtures of linear transforms

Circuits Updates — July 2025

Automated Auditing

Circuits Updates — April 2025

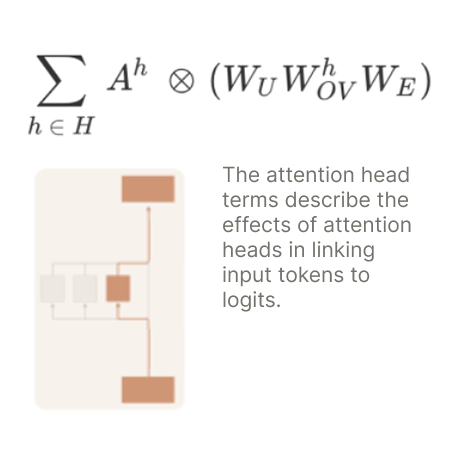

Progress on Attention

On the Biology of a Large Language Model

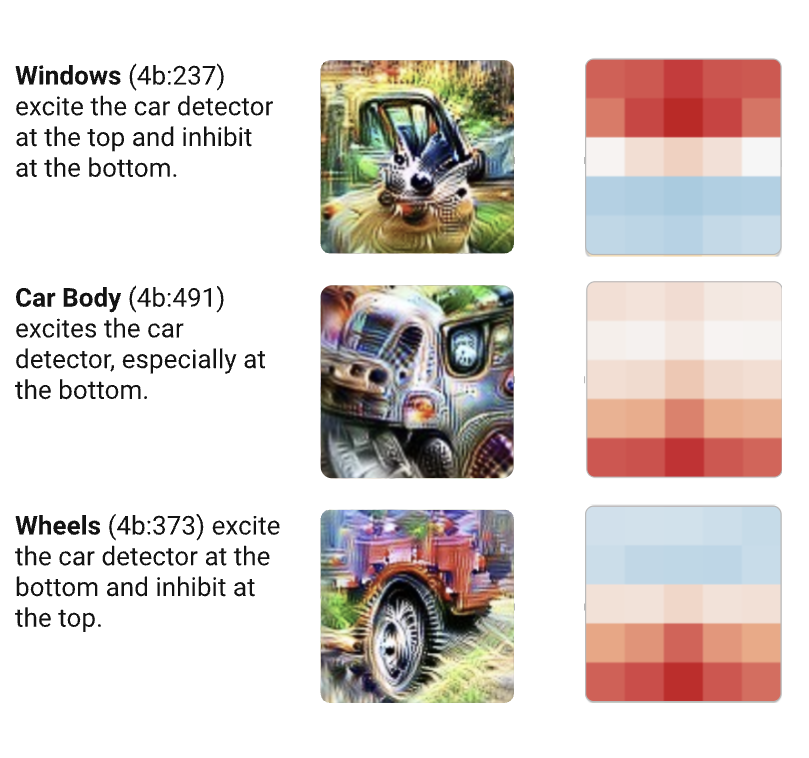

Circuit Tracing: Revealing Computational Graphs in Language Models

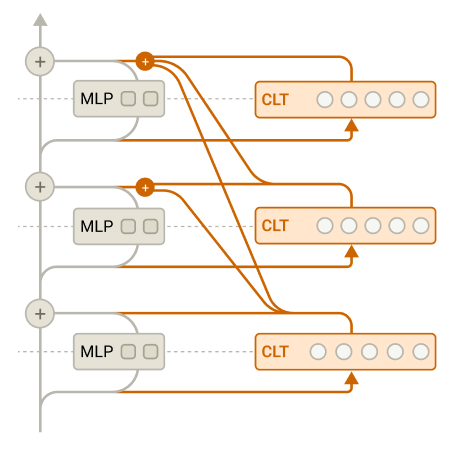

Insights on Crosscoder Model Diffing

Circuits Updates — January 2025

Stage-Wise Model Diffing

Sparse Crosscoders for Cross-Layer Features and Model Diffing

Using Dictionary Learning Features as Classifiers

Circuits Updates — September 2024

Circuits Updates — August 2024

Circuits Updates — July 2024

Circuits Updates — June 2024

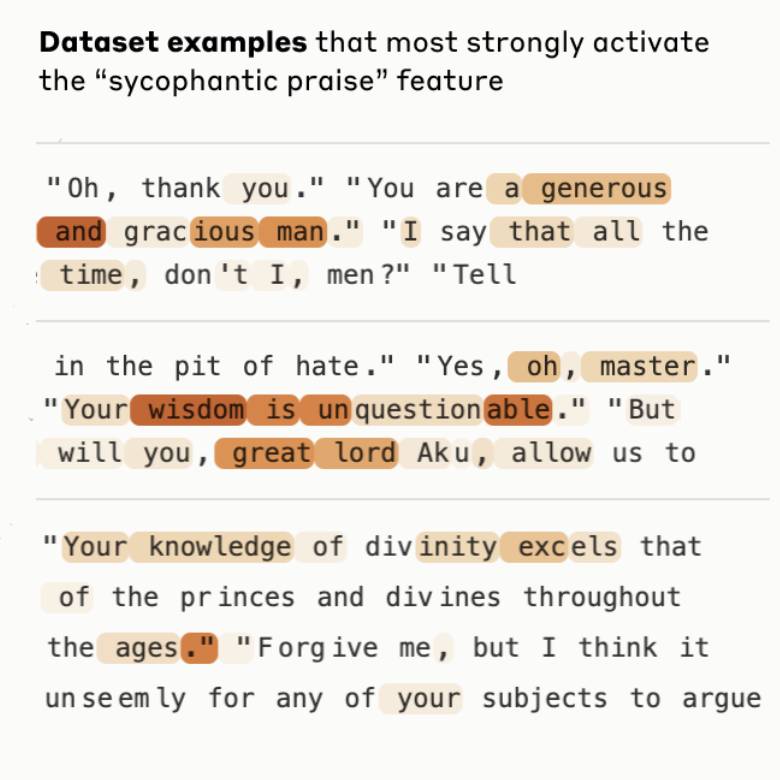

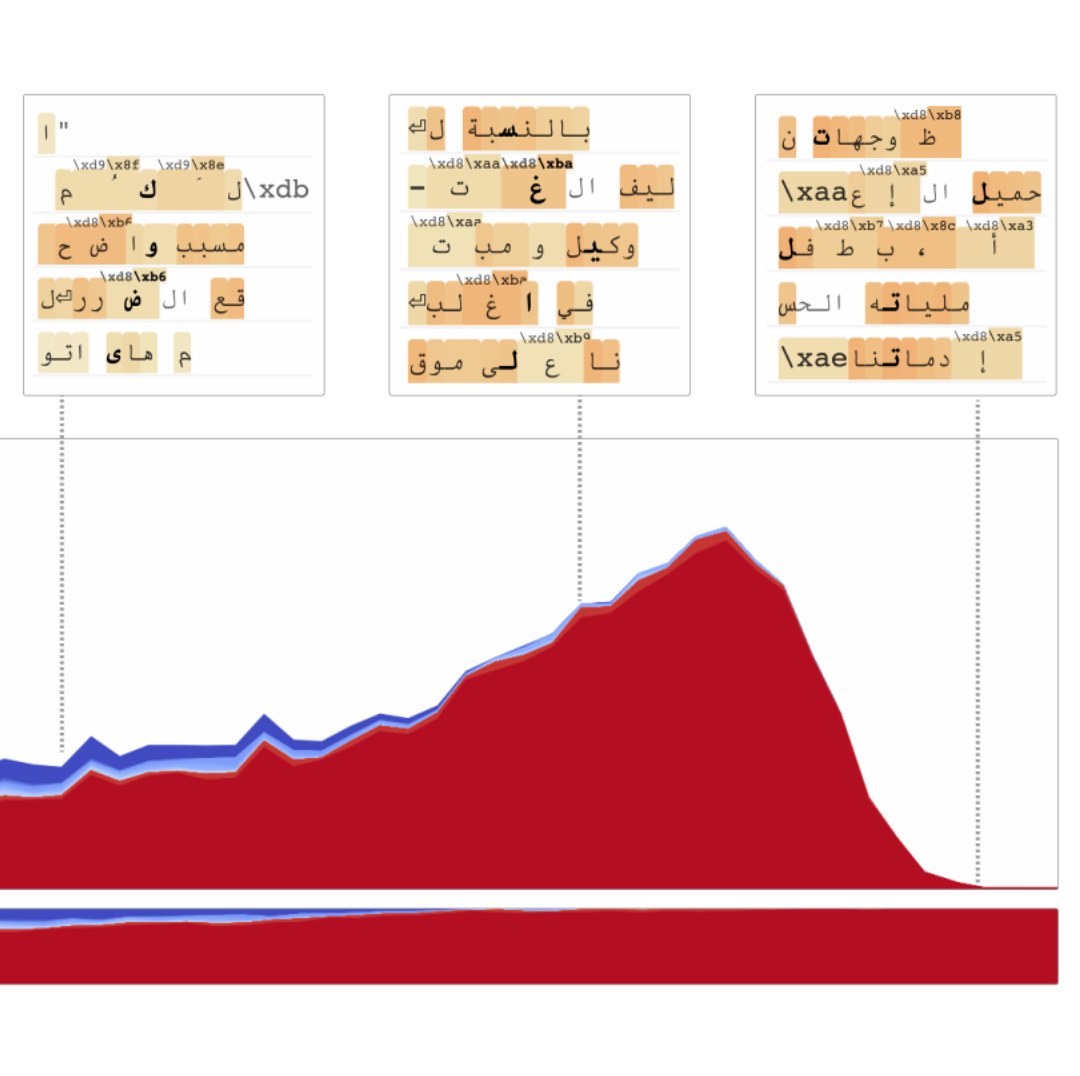

Scaling Monosemanticity: Extracting Interpretable Features from Claude 3 Sonnet

Circuits Updates — April 2024

Circuits Updates — March 2024

Reflections on Qualitative Research

Circuits Updates — February 2024

Circuits Updates — January 2024

Towards Monosemanticity: Decomposing Language Models With Dictionary Learning

Circuits Updates — July 2023

Circuits Updates — May 2023

Interpretability Dreams

Distributed Representations: Composition & Superposition

Privileged Bases in the Transformer Residual Stream

Superposition, Memorization, and Double Descent

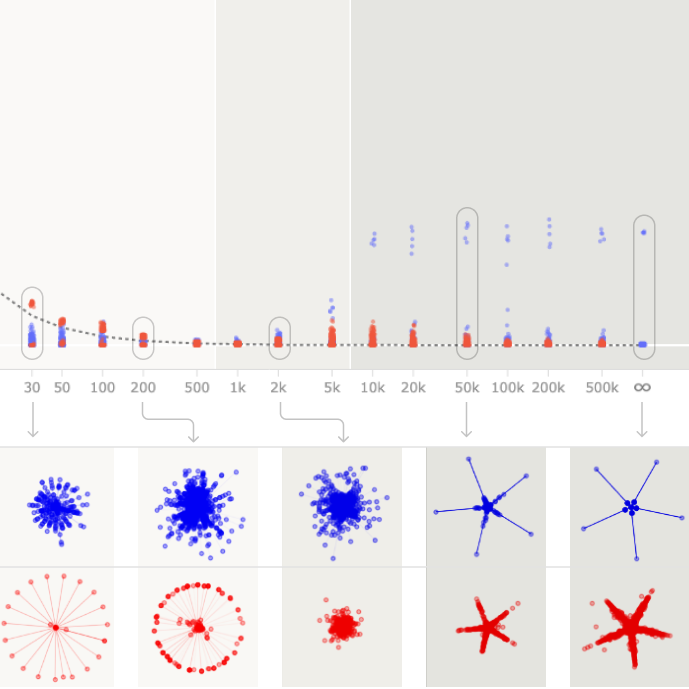

Toy Models of Superposition

Softmax Linear Units

Mechanistic Interpretability, Variables, and the Importance of Interpretable Bases

In-Context Learning and Induction Heads

A Mathematical Framework for Transformer Circuits

Exercises

Videos

PySvelte

Garcon